PSYCH256:

Lesson 1 : History and Research Methods

Introduction

In this lesson, we will examine the historical events that led to the current field of cognitive psychology. In order to understand where we are today, it is helpful to understand where we have been. Cognitive psychology (at least the term "cognitive psychology") has only been in use since the late 1960s, although cognitive research has a much longer history. We will also look at how the invention of the digital computer led to new ways of thinking about the brain and cognition. Finally, we will examine the basic methods cognitive psychologists use to study the mind, the brain, and the ways we interact with our environment.

Lesson Objectives

After completing this lesson, you should be able to

- identify the significant historical events and schools of psychology that influenced the foundations of cognitive psychology,

- understand how the computer analogy (and the information-processing approach) has guided thinking on human memory and cognition, and

- explain what the mind is and the methods that are used to study it.

By the end of this lesson, make sure you have completed the readings and activities found in the Lesson 1 Course Schedule.

What Is the Mind?

Welcome to the course! Throughout the next few weeks, we will be discussing one of the most interesting areas in all of psychology—cognitive psychology. I hope you enjoy the topic as much as I do!

So what is cognitive psychology? The typical definition is as follows: the scientific study of the mind. While this simple definition captures the field of cognitive psychology, you may be asking yourself, what exactly is the mind? The mind encompasses all aspects of our neurological experience, and we will be covering many important topics related to it throughout the course. For example, cognition involves neuroscience, perception, attention, memory, knowledge, imagery, language, problem solving, and reasoning and decision making. We will spend time looking at each of these topics in detail.

So let’s go back to the mind. What exactly is it? The mind controls mental functions, including the areas of cognition listed above. To do this, the mind must use a "code" to manipulate information: it must represent information in order to use, store, and manipulate it. Much of cognitive psychology studies how the brain represents information. We can make an analogy with a computer. A computer stores and manipulates information in binary code (a series of 1s and 0s). Our brain does something similar. The brain must take information from the environment (like visual or auditory information) or from within the brain itself (thoughts and feelings) and transform it into a format that the brain can use. Perception, attention, memory, imagery: basically every function our brain performs depends on how the brain represents information. Cognitive psychology is interested in understanding how these systems work by examining how this information is represented. Cognitive psychology is a relatively new field in psychology, but the study of the mind has a long intellectual tradition.

History

People have likely wondered about the mind since we first started walking on this earth. The Greeks (e.g., Socrates, Aristotle, and Plato) wondered how we gained knowledge about the world. They developed the ideas of nativism and empiricism: knowledge present at birth or gained through experience with an environment, respectively. The debate between these two ideas still exists today throughout much of psychology and is currently framed as the debate between nature and nurture. During the Renaissance, many of the world's leading intellectuals joined the discussion regarding the mind.

One of the most influential people during this period was René Descartes. He proposed the mind/body problem (also referred to as dualism or the mind-body dichotomy), which makes a distinction between the physical body and the nonmaterial mind. Today this debate concerns the relationship between consciousness and the brain. For example, it is easy to imagine your arm and what might happen if it gets cut: you would likely start bleeding, you could see layers of your skin, and so forth. But what about this morning's breakfast? Can you remember or describe what you ate? Clearly something is going on in your brain when you have a thought, but is the thought itself something tangible? While this is an extremely interesting question, we will leave the philosophers to ponder the problem (although advances in neuroscience, covered more in Lesson 2, are shedding light on the answer).

Other philosophers entered the arena as well. John Locke revisited the debate of Plato and Aristotle and proposed tabula rasa—the idea that we enter the world with a "blank slate" (imagine a clean chalkboard) and only gain knowledge and abilities through our experiences with the world. According to Locke, nothing exists in the mind until we directly experience the world around us (filling our chalkboards with our experiences). Locke's ideas formed the basis for the behaviorist approach of the early 20th century.

The Beginning

The First Cognitive Study

Figure 1.5. Donders, Public Domain, commons.wikimedia.org


Shortly after Weber and Fechner, a Dutch physiologist named Franciscus Donders developed a study that attempted to measure the time it takes for a human to make a decision. He devised a simple yet clever two-part experiment that allowed the mental processes involved in making a decision to be examined (at least in terms of time). In part one, he used a simple reaction-time task: subjects pressed a button as soon as they detected a light. He assumed that the time to respond included the time to perceive the stimulus and the time to prepare and make the response (pushing the button).

In part two, he used a choice reaction-time task. Subjects were presented with two lights, one on the left and one on the right. When the left light turned on, subjects pushed a button on the left; when the right light turned on, they pushed a button on the right. In this case, subjects had to decide which response to make based on the incoming sensory information. Donders assumed that the choice reaction time would include the time to perceive the stimulus and the time to prepare and make the response, just as in the simple task. Additionally, because subjects had to choose a response, the reaction time would also include the time to make a decision.

By subtracting the simple reaction time from the choice reaction time, we can estimate the time it takes to make a decision.

Figure 1.6. Equation for Estimating Decision Timing: decision time = choice reaction time − simple reaction time
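Donders's subtractive logic can be sketched in a few lines of Python. The reaction times below are hypothetical values chosen only for illustration (they are not Donders's data), and the function name `estimate_decision_time` is our own.

```python
from statistics import mean

def estimate_decision_time(simple_rts_ms, choice_rts_ms):
    """Donders's subtractive method: mean choice RT minus mean simple RT.

    Assumes the choice task adds exactly one stage (the decision) to the
    stages already present in the simple task (perceiving and responding).
    """
    return mean(choice_rts_ms) - mean(simple_rts_ms)

# Hypothetical reaction times in milliseconds, for illustration only.
simple_rts = [210, 190, 200, 205, 195]  # press as soon as a light appears
choice_rts = [290, 280, 300, 285, 295]  # press left or right depending on the light

decision_ms = estimate_decision_time(simple_rts, choice_rts)
print(f"Estimated decision time: {decision_ms:.0f} ms")  # 90 ms
```

The subtraction is only valid if adding the decision stage leaves the other stages unchanged, an assumption (often called pure insertion) that later researchers questioned.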

It’s that easy! So can we study all decision making based on the results of Donders’s study? Probably not. Most of the decisions we make every day are much more complex than the decisions that were required in Donders’s tasks. We will examine the decision-making process more closely in Lesson 11 ("Reasoning and Decision Making").

The First Psychological Laboratory

Figure 1.7. Wundt & colleagues, Public Domain, commons.wikimedia.org


Around the time Donders was completing his study, a German named Wilhelm Wundt was developing the method of introspection to examine how the brain represents basic sensory information. He is credited with starting the first psychological laboratory in 1879; however, the method of introspection proved to be too subjective and was abandoned in favor of other methods. Despite the lack of success with introspection, Wundt is considered one of the founders of modern psychology. The image on this page shows Wilhelm Wundt and his colleagues in the first psychology laboratory at the University of Leipzig in Germany.

The First Psychology Textbook

Figure 1.8. James, Public Domain, commons.wikimedia.org


In the United States, William James wrote one of the first psychology textbooks, The Principles of Psychology (1890), and taught the first courses in psychology. He made observations about many psychological phenomena, including many topics that are now central to cognitive psychology.

Behaviorism and Gestalt

Figure 1.9. Watson, Public Domain, commons.wikimedia.org


The field of psychology was established well before the calendar turned to the 20th century (1900s), so you might expect that the next hundred or so years would bring about great advances in cognitive psychology. Unfortunately, this was not the case, particularly in the United States. The rise of behaviorism in the 1920s led to a relatively long (approximately 40 years) hiatus from the study of mental processes. The early behaviorists, particularly John Watson, attempted to bring the methodology of psychology closer to the realm of the basic sciences, such as chemistry, biology, and physics. Rather than focus on introspection, they focused their efforts on the relationship between the environment and behavior. In fact, they made a point of not attempting to examine or speculate on the inner workings of the mind.

Instead, they focused on developing methods to study learning specifically. John Watson studied a specific type of learning called classical conditioning. B.F. Skinner, who was strongly influenced by Watson’s work, advanced Watson’s beliefs and developed theories and described phenomena related to operant conditioning.

Figure 1.10. Skinner, Author: Silly rabbit, commons.wikimedia.org


While Skinner and Watson greatly advanced our understanding of learning, one of the central tenets of behaviorism was that psychology should not attempt to study or reference any mental state or process that is unobservable (unfortunately, this idea basically excludes all of cognitive psychology).

In Europe, around the same time as behaviorism was on the rise in the United States, Gestalt psychology was gaining importance. Gestalt psychology provides interesting insights into our perceptual experiences. The basic tenet of Gestalt psychology is that “the whole is greater than the sum of its parts.” For perception, this means that to understand our perceptual experiences, we need to look at the whole of our perception rather than the individual parts that make up our environment. For example, take a look at Figure 1.11.

Figure 1.11. Example of Perceptual Experiences

All four figures (a–d) are composed of the same lines. Yet our perceptual experience differs drastically with each one because we view the entire "picture" rather than each individual line. The Gestalt psychologists provided numerous examples such as these to support the idea that our perceptual experience depends on the entirety of our experience and not on the individual parts that make it up. The Gestalt psychologists proposed many rules for how we group objects, but their theories never really took off in the United States because of the rise of behaviorism.

Cognitive Revolution

The cognitive revolution grew out of a rejection of the assumptions of behaviorism. Behaviorists, remember, proposed that it is not necessary to study mental events in order to describe behavior. Their focus was the study of the environment and behavior. They considered the mind a sort of "black box": things happen inside the box, but we don’t care (and don't need to know) what goes on inside. By the late 1940s and early 1950s, however, researchers realized that any explanation of human behavior and abilities must include an examination of how people mentally represent the world around them. This idea formed the basis for the cognitive revolution.

Figure 1.12. Miller, Author: Unknown, commons.wikimedia.org


The historical context of the era played a role in the development and expansion of cognitive psychology (although the term "cognitive psychology" wasn’t coined until 1967 by Ulric Neisser). During World War II, human factors engineering was a field that grew rapidly. Technology quickly developed, and personnel had to be trained to use the equipment efficiently and effectively. Equipment started to be designed with the user in mind, both in terms of physical and mental abilities. Psychologists were intimately involved in the design process, and after the war ended, many of them returned to their roots as psychologists. But during the war, they had been exposed to different ways of thinking about information. They discovered that humans were processors of information, much like a computer (we’ll get to that idea in a second), with limits on the amount of information that we can process.

Researchers started to study the limits of this capacity to process information and laid the groundwork for the birth of cognitive psychology; this was the beginning of the cognitive revolution in the 1950s. George Miller (1956) wrote one of the most famous papers in psychology, which examined the limits of our capacity to process information. Miller examined how many distinct things we can perceive without counting and how many unrelated items we can recall immediately (somewhere between 5 and 9, with an average of 7). This research was instrumental in demonstrating that cognitive processes (and their limits) could be studied and measured.
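To make the idea of an immediate-recall span concrete, here is a minimal Python sketch of how a span task might be scored. It assumes one common convention (the span is the longest list length recalled perfectly, in order); Miller (1956) did not prescribe this procedure, and the function name and trials are hypothetical.

```python
def memory_span(trials):
    """Return the longest list length recalled perfectly.

    `trials` is a list of (presented, recalled) pairs of sequences.
    Scoring rule (an assumption for this sketch): a trial counts only if
    every item is recalled in the correct order.
    """
    correct_lengths = [len(presented) for presented, recalled in trials
                       if list(presented) == list(recalled)]
    return max(correct_lengths, default=0)

# Hypothetical trials using unrelated digits, growing in length.
trials = [
    ([3, 8, 1, 6, 4], [3, 8, 1, 6, 4]),                    # 5 items, correct
    ([7, 2, 9, 5, 1, 8], [7, 2, 9, 5, 1, 8]),              # 6 items, correct
    ([4, 6, 2, 9, 3, 7, 5], [4, 6, 2, 9, 3, 7, 5]),        # 7 items, correct
    ([1, 5, 8, 3, 6, 2, 9, 4], [1, 5, 8, 6, 3, 2, 9, 4]),  # 8 items, order error
]
print(memory_span(trials))  # 7
```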

Figure 1.13. Chomsky, Author: Duncan Rawlinson commons.wikimedia.org


Around the same time as Miller, Noam Chomsky revolutionized the field of linguistics. Chomsky was a strong and vocal critic of B.F. Skinner and of behaviorism in general. His interests lay in the acquisition, understanding, and production of language, and he felt that behaviorism could not offer a sufficient explanation of them. In his early work, he argued that behaviorism cannot explain how humans use language (Chomsky, 1959, 1965). In particular, he looked at the grammatical mistakes children make (you have probably heard many of them: “I ranned,” “He goed,” “Look at the deers,” etc.). Behaviorism holds that learning requires reinforcement, yet children are not reinforced for these utterances (cute as they are), and they are unlikely to have heard adults say them. Instead, Chomsky proposed that our acquisition, understanding, and use of language are related to the way the mind is constructed, particularly the way we represent information.

Taken together, these studies demonstrated the need to look beyond behavior. The mind was slowly being brought back into the picture of mainstream psychology (at least in the United States). But there was another development that greatly influenced the rise of cognitive psychology—the digital computer.

Computers and Cognitive Revolution

In 1950, Alan Turing discussed the relationship between machines and intelligence (Turing, 1950). His influential paper set the stage for developing the metaphor between the mind and the computer. In it, Turing devised the now-famous Turing test, a framework for examining whether a machine can be said to be intelligent. Watch Video 1.1 for an explanation.

JEREMY, REPORTER: Very credible scientists out there are pushing A.I. as the next big thing. Even Alan Turing predicted that one day computers would have minds of their own.

To find out whether a computer was intelligent or not, Turing devised a test. It was very simple. He said that if you can have a conversation with it and believe it to be human, then it must have some kind of intelligence.

[A SERIES OF IMAGES OF A COMPUTER TESTING ROOM IS SHOWN]

He even scripted an example of the kind of intelligent chats that might occur.

[DRAMATIZATION OF A MAN TALKING TO A COMPUTER]

MAN: In the first line of your sonnet, which reads, "Shall I compare thee to a summer's day?" would not a spring day do as well or better?

COMPUTER: It wouldn't scan.

MAN: How about a winter's day? That would scan all right.

COMPUTER: Yes, but nobody wants to be compared to a winter's day.

MAN: Would you say that Mr. Pickwick reminded you of Christmas?

COMPUTER: In a way.

MAN: Yet Christmas is a winter's day, and I don't think that Mr. Pickwick would mind the comparison.

COMPUTER: I don't think you're serious. By a winter's day, one means a typical winter's day, rather than a special one like Christmas.

[LAUGHTER]

JEREMY: In 1950, Turing predicted that within a hundred years, we'd be able to have a conversation like that on any subject with a machine. However, there are those who say we can do it now, 50 years early.

[MUSIC PLAYING]

JEREMY, SITTING IN FRONT OF A COMPUTER: This is what they're talking about.

[A WOMAN'S FACE APPEARS ON A COMPUTER SCREEN]

JEREMY: This is Ella. Don't be fooled by the picture. She's a machine, and I'm supposed to be able to have a conversation with her. So let's give it a try. What's she saying here?

ELLA: Hello, my name's Ella. Nice to meet you. Please tell me what name I should call you.

JEREMY: Jeremy, say.

ELLA: Does being a man pay well?

JEREMY: Do you like cheese?

ELLA: I'm not so excited about cheese. A little is OK.

JEREMY: I saw the Doobie Brothers there.

ELLA: How did it look to you?

JEREMY: Not it look, they look. You've never heard of the Doobie Brothers, have you?

ELLA: What's heard of the Doobie Brothers?

[LAUGHTER]

JEREMY: You don't even know where to put apostrophes.

She's intelligent in the same way that I think my dog's intelligent. Who, what, where, why, and how—you should be a newspaper reporter. Again, let me change the subject a little. Hmm, she doesn't know, does she?

[VIDEO ENDS]

Turing set the stage for looking at the relationship between the mind and the computer. It was around this time (the 1950s and 1960s) that digital computer use was rising at universities across the country (there still weren’t any computers in homes yet; most computers at the time filled large rooms. Yes, entire rooms!). In fact, look at MIT’s Whirlwind computer from 1951 on the left in Figure 1.14. It took up 3,100 square feet of floor space, more than many homes, and had a memory of 32 kB (32,768 bytes). Compare that with the iPhone 5 on the right in Figure 1.14, which has a volume of 3.31 in³ and a memory of 64 GB (68,719,476,736 bytes).

Figure 1.14. Computers in 1951 vs. 2013
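The size of that jump is easy to check. This short Python sketch uses the binary convention implied by the figures above (1 kB = 1,024 bytes, so 32 kB = 32,768 bytes) and applies it to both machines; the variable names are our own.

```python
# Memory sizes as stated in the text, using binary multiples (1 kB = 1,024 bytes).
whirlwind_bytes = 32 * 1024      # 32 kB = 32,768 bytes
iphone5_bytes = 64 * 1024 ** 3   # 64 GB = 68,719,476,736 bytes

ratio = iphone5_bytes // whirlwind_bytes
print(f"Whirlwind: {whirlwind_bytes:,} bytes")
print(f"iPhone 5:  {iphone5_bytes:,} bytes")
print(f"The iPhone 5 holds {ratio:,} times as many bytes.")  # 2,097,152
```

Because both sizes are powers of two (2^15 and 2^36 bytes), the ratio is exactly 2^21.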

Similarities

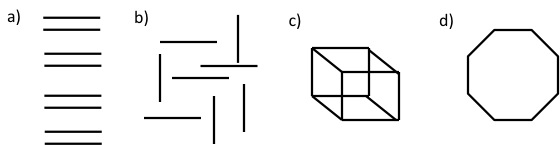

The spread of computers gave researchers another way to think about the mind. Both computers and people must use and store information. In fact, the metaphor between computers and the mind has proven quite useful over the years. For example, take a look at a flow diagram for an early computer and compare that with a diagram of an early model of attention (covered more in Lesson 4).

Figure 1.15. Early Computer Flow Diagram


Figure 1.16. Early Model of Attention

Notice the similarity in the form of the two images. The flow diagrams that were used by computer scientists proved useful in describing the mind as well.

But the similarities between computers and the mind go beyond diagramming how they work. Both have to code information in a way that can be used. Computers use binary code (a series of 0s and 1s) to represent information. Input into a computer (from a keyboard, mouse, camera, or any other device) is coded into binary so that the hardware and software of the computer can use, manipulate, and store the information. A similar transformation takes place during output, whether the information is presented on a screen or sent to a printer or speaker.
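A short Python sketch shows the kind of input and output transformation described above: characters typed at a keyboard are coded as 0s and 1s, and the same code is decoded back for display. UTF-8 is assumed as the character encoding, and the helper names are hypothetical; real computers involve many more layers of coding.

```python
def to_binary(text):
    """Encode text as UTF-8 bytes and write each byte as 8 binary digits."""
    return " ".join(format(byte, "08b") for byte in text.encode("utf-8"))

def from_binary(bits):
    """Reverse the coding: parse each 8-digit group into a byte, then decode."""
    return bytes(int(group, 2) for group in bits.split()).decode("utf-8")

encoded = to_binary("Hi")
print(encoded)               # 01001000 01101001
print(from_binary(encoded))  # Hi
```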

Our minds must perform similar operations. When we perceive information from the environment, our sensory receptors must take energy from the environment (electromagnetic energy for light, sound waves for sound, etc.) and convert the energy into signals that the brain can use (action potentials). Note that we will cover the brain and action potentials in Lesson 2 and perception in Lesson 3. The brain must also represent the information in a way that allows us to connect the information from the environment with information that we have experienced before (for example, to allow us to identify objects and people). The brain has to store information so we can retrieve it later (e.g., memory, which is covered in Lessons 5–8) as well as manipulate the information so we can use it (e.g., knowledge—Lesson 9, problem solving—Lesson 12, and reasoning and decision making—Lesson 14). The representation of information is critical to our understanding of how our minds operate, and we will examine this issue throughout the remainder of the semester.

Differences

While the computer metaphor of the mind has proven useful in our understanding, the metaphor is far from perfect. A few key differences between the mind and the computer are

- active versus passive processing and

- serial versus parallel processing.

The label "active versus passive processing" might not be the best way to describe the problem, but the key point is that humans can learn great amounts of information. Currently, computers are limited in the ways they can process information: everything must be programmed into a computer, and the transformations computers make are based on code written by developers. You could argue that humans run on code as well, but we are able to handle and interact with our environment in a much more complex fashion. Additionally, most current computers (although this is slowly changing) are designed to run serially, meaning one step must be completed before another can be started. Humans, on the other hand, are able to process information in parallel, with many processes running at the same time. These differences have large implications for the way we handle and represent information from our environment, and they also limit the extent to which computers can serve as an analogy for our minds.
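The serial/parallel contrast can be illustrated (as an analogy only, not a model of neurons) with a small Python sketch. The `process` function and its 0.1-second `time.sleep` are hypothetical stand-ins for a processing step; running four such steps one after another takes about four times as long as running them concurrently, while producing the same results.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def process(stimulus):
    """Hypothetical processing step; the sleep simulates work taking time."""
    time.sleep(0.1)
    return stimulus.upper()

stimuli = ["color", "shape", "motion", "sound"]

# Serial: each step must finish before the next one begins.
start = time.perf_counter()
serial_results = [process(s) for s in stimuli]
serial_time = time.perf_counter() - start

# Parallel: all steps are started together and run concurrently.
start = time.perf_counter()
with ThreadPoolExecutor(max_workers=len(stimuli)) as pool:
    parallel_results = list(pool.map(process, stimuli))  # keeps input order
parallel_time = time.perf_counter() - start

print(serial_results == parallel_results)  # True: same answers either way
print(f"serial {serial_time:.2f} s, parallel {parallel_time:.2f} s")
```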

Methods in Cognition

Throughout the course, we will look at different empirical studies of cognition, and it will be helpful to have a general understanding of the types of studies that cognitive psychologists conduct. While this discussion will not be an exhaustive list of all possible methods and studies, it will give you a general framework for understanding the research studies we will discuss throughout the remainder of the course. The four major methodologies we will discuss are the experiment, naturalistic observations, controlled observations, and investigations of neural underpinnings.

The Experiment

By far the most common approach to understanding cognition is the experiment. In an experiment, a researcher manipulates one or more independent variables (the levels of which are sometimes referred to as conditions) and measures how this manipulation changes an outcome, usually some aspect of participants' behavior such as reaction time or accuracy (called the dependent variable). If the experiment is designed well (although a perfect design is rarely, if ever, attainable), researchers can conclude that changes in the dependent variable were caused by manipulations of the independent variable.

The experiment has proven to be a very powerful method for understanding cognition. Its distinct advantage is that it allows much more control than the other methods. Often (although not always), experiments are performed in the laboratory, where factors in the experiment can be precisely controlled. In fact, control is one of the distinguishing features of the experiment: when designing the study, the researcher wants to control as many things as possible, because this control allows the strongest conclusions to be drawn.
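The logic of an experiment can be sketched as a small Python simulation. Everything here is hypothetical: the participants, the "quiet" versus "distraction" conditions (the independent variable), and the number of words recalled (the dependent variable). The 3-word drop under distraction is an arbitrary assumption built into the simulation, so the output says nothing about real memory; the sketch only shows random assignment to conditions followed by a comparison of the dependent variable across them.

```python
import random
from statistics import mean

random.seed(1)  # fixed seed so the sketch is reproducible

# Hypothetical participant IDs P01..P20.
participants = [f"P{i:02d}" for i in range(1, 21)]

# Independent variable: background condition. Random assignment spreads
# other differences between people across the two groups, on average.
shuffled = random.sample(participants, k=len(participants))
condition = {p: ("distraction" if i < 10 else "quiet") for i, p in enumerate(shuffled)}

def measure_recall(participant):
    """Hypothetical stand-in for measuring the dependent variable (words recalled).

    The 3-word drop under distraction is an assumption of this simulation,
    not an empirical finding.
    """
    score = random.gauss(12, 2)  # simulated person-to-person variability
    if condition[participant] == "distraction":
        score -= 3
    return score

recall = {p: measure_recall(p) for p in participants}

for level in ("quiet", "distraction"):
    scores = [recall[p] for p in participants if condition[p] == level]
    print(f"{level:<12} n={len(scores)}  mean words recalled = {mean(scores):.1f}")
```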

The experiment has a long history in cognitive psychology; in fact, experiments have been used since the dawn of psychology to examine psychological phenomena. Gustav Fechner (discussed earlier in this lesson) developed experimental methods to study the relationship between the environment and our perceptual experiences, carefully relating sensory experience to the strength of stimuli in the environment. One of the methods he developed, the method of constant stimuli, is still frequently used today. The method of constant stimuli can be used in a variety of designs and is particularly good at measuring thresholds: the minimum amount of energy required to detect that a stimulus is present (the absolute threshold) or the minimum difference required to detect a difference between two stimuli (the difference threshold). The practice CogLab assignment this week involves a study that uses the method of constant stimuli to measure the strength of the Müller-Lyer illusion (see the Assignments page in this lesson).
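Here is a minimal Python sketch of how data from the method of constant stimuli might be summarized. It assumes a hypothetical line-length task (not the CogLab procedure): a standard line is compared with plain lines at a fixed set of lengths, each presented 20 times in random order, and the response counts are invented for illustration. The comparison length judged "longer" half the time is the point of subjective equality (PSE); in an illusion study, the gap between the PSE and the standard's true length is one index of the illusion's strength. Linear interpolation is a simplification; real analyses usually fit a smooth psychometric function.

```python
# Hypothetical data: a 26 mm standard line compared with plain lines at
# fixed lengths, each presented 20 times in random order.
standard_mm = 26
comparison_mm = [20, 22, 24, 26, 28, 30]
presentations = 20
judged_longer = [2, 4, 8, 13, 17, 19]  # times the comparison was called "longer"

def proportions(counts, n):
    """Convert response counts into proportions of 'longer' judgments."""
    return [k / n for k in counts]

def point_of_subjective_equality(levels, props, criterion=0.5):
    """Linearly interpolate the level at which P('longer') crosses the criterion."""
    pairs = list(zip(levels, props))
    for (x0, p0), (x1, p1) in zip(pairs, pairs[1:]):
        if p0 <= criterion <= p1 and p1 > p0:
            return x0 + (criterion - p0) * (x1 - x0) / (p1 - p0)
    raise ValueError("criterion not crossed within the tested range")

props = proportions(judged_longer, presentations)
pse = point_of_subjective_equality(comparison_mm, props)
print("P('longer'):", [round(p, 2) for p in props])
print(f"PSE = {pse:.1f} mm; the standard looks {standard_mm - pse:.1f} mm shorter than it is")
```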

Naturalistic Observations

Naturalistic observations consist of an observer watching people interact with their environment in real-life contexts. For example, researchers may be interested in how users interact with a new self-checkout machine at the local grocery store. (Yes, this is one of the things that cognitive psychologists study—the design of machines and interfaces to allow for efficient cognitive use.) A researcher may watch customers as they scan, pay for, and bag their goods.

The advantage of naturalistic observations is that they represent what goes on in the real world (unlike traditional experiments completed in the laboratory). Also, researchers can study how cognitive processes work in natural settings. For example, researchers may look at the flexibility of the processes, the complexity of the behaviors, and how they are affected by changes in the environment.

The disadvantage of naturalistic observations is that the researcher loses control over the environment, which makes it much more difficult to isolate causes and effects. For example, in the self-checkout lane, people may have varying amounts of experience, the machine could be slow that day, or any number of other factors could influence how the users interact with the machine.

Controlled Observations

Controlled observations, as the name suggests, allow researchers to establish more control over the observations. For example, participants may be selected on the basis of some characteristic, different instructions may be provided for certain participants, or certain information may be displayed for some participants. The observations are often still conducted in the real world, but the researcher tries to influence the process. This influence allows the researcher to draw stronger conclusions and test specific hypotheses. Typically, the amount of control afforded to the researcher in a controlled observation is less than what we would see in a traditional laboratory experiment.

Neural Underpinnings

With the technological advances in the past 50–60 years, cognitive psychologists can now look inside the brain (to a certain degree) while a person is alive and well. Much of the work in cognitive neuroscience focuses on the study of the brain and the neural underpinnings of our behavior. For example, neuroscientists might look for areas of the brain that are involved with memory or speech or discover which neurons are active when we make decisions. The discoveries from cognitive neuroscience have revolutionized our understanding of cognitive psychology. We won't spend much time talking about these methods in this lesson; the entirety of Lesson 2 is devoted to cognitive neuroscience.

Summary

Now we have an idea of how we define and think about the mind; hopefully, we at least have the foundations. The rest of the course will be an exploration of the mind in various aspects of our lives. We have discussed the history of psychology and the influences of early biologists and philosophers in the field. We discussed the major schools of psychology and examined how they influenced the development of cognitive psychology. We also studied how the invention of the digital computer changed our way of thinking about the brain. Finally, we looked at the methods cognitive psychologists use to study the mind and the brain.

References

Chomsky, N. (1959). A review of B. F. Skinner's Verbal Behavior. Language, 35(1), 26–58.

Chomsky, N. (1965). Aspects of the theory of syntax. MIT Press.

Miller, G. A. (1956). The magical number seven, plus or minus two: Some limits on our capacity for processing information. Psychological Review, 63(2), 81–97.

Turing, A. M. (1950). Computing machinery and intelligence. Mind, 59(236), 433–460.